Key Takeaways:

- Figure 01 learned to make coffee by watching a human demonstration and can now hold human-like conversations.

- An integration with OpenAI’s technology lets Figure 01 answer questions, combining advances in robotics and natural language processing.

- Its ability to carry out simple tasks while explaining its actions aloud reflects progress in both mechanical engineering and real-time language processing.

- Precise motor control lets Figure 01 handle objects with human-like dexterity.

- Taken together, the demonstration shows progress toward machines that can understand and communicate in natural language.

Figure 01 learned to make coffee by watching a human, and now it can hold a conversation like one, too.

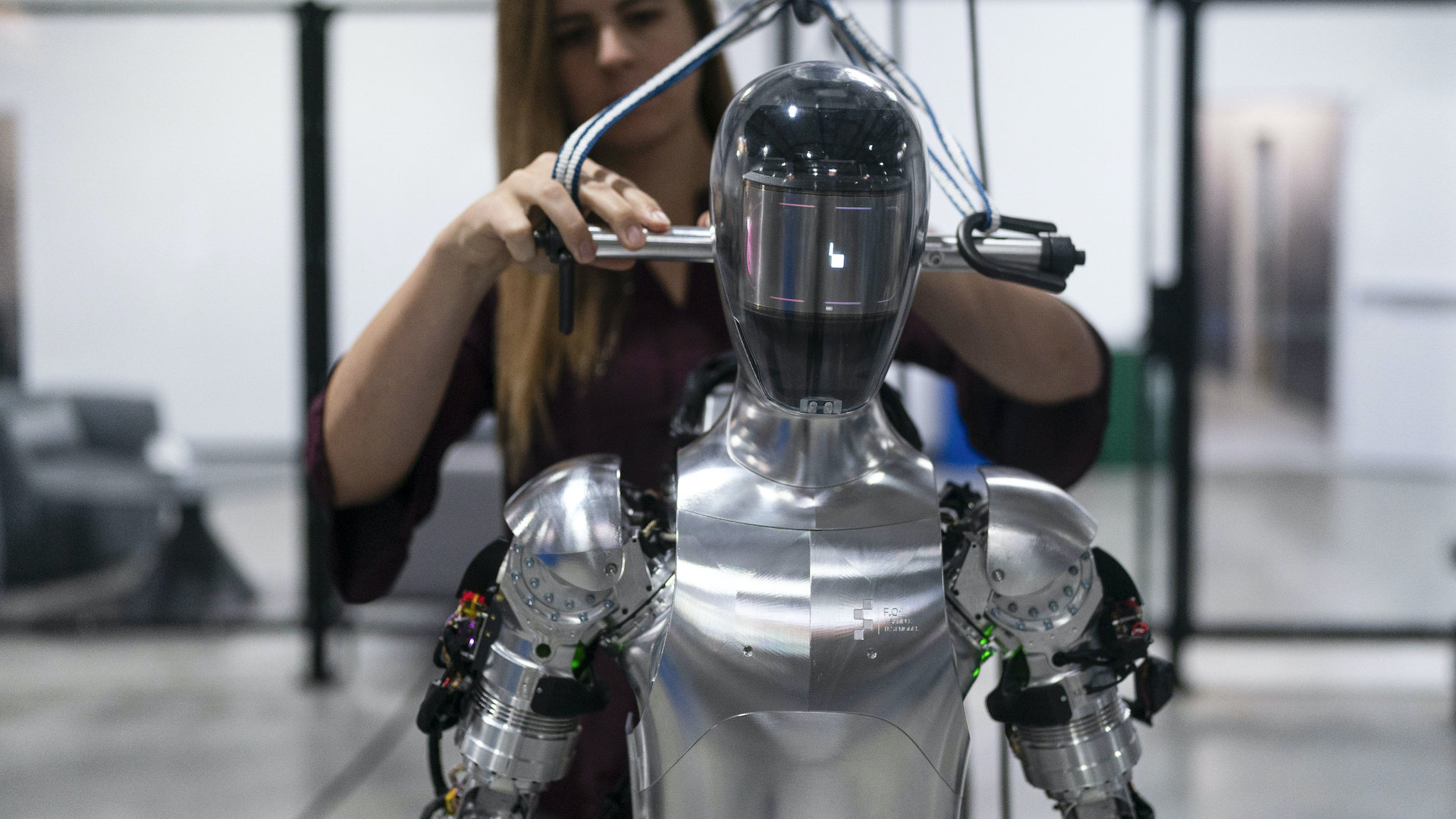

A humanoid robot that taught itself to make a cup of coffee simply by watching a human demonstration can now answer questions, thanks to an integration with OpenAI’s technology.

In a recent promotional video, a technician asks Figure 01 to perform a series of simple tasks in a basic test area set up to resemble a kitchen. He first asks for something to eat, and the robot hands him an apple. Then, while Figure 01 is clearing away some trash, he asks it to explain why it gave him the apple. Although it is unmistakably a machine, Figure 01 answers in a friendly, conversational tone.

In its promotional material, the company said the robot’s conversational abilities come from an integration with technology developed by OpenAI, the company behind ChatGPT. It seems unlikely, however, that Figure 01 uses ChatGPT directly, because it uses filler words such as “um,” which ChatGPT does not typically produce.

If the capabilities shown in the video work as presented, they represent a significant advance in two key areas of robotics. As experts previously told Live Science, the first is the mechanical engineering behind dexterous, self-correcting movements like those humans make. This involves precise control of motors, actuators, and grippers modeled on biological joints and muscles, as well as the motor coordination needed to carry out tasks and handle objects delicately.

Even a task as simple as picking up a cup requires substantial on-board computation to move the robot’s actuators, its equivalent of muscles, in a precise sequence.

The second advance is real-time natural language processing (NLP), enabled by OpenAI’s engine, which must respond as quickly as ChatGPT does when answering a typed query. The robot also needs software that converts the engine’s output into audible speech. NLP is the branch of computer science concerned with enabling machines to understand and produce human language.